CollabCam: Deep Learning based System for Energy-Efficient Pervasive Vision

Problem: Deploying deep-learning-based visual analytics across multiple cameras often incurs high network bandwidth and energy costs, especially when each camera transmits full-resolution images even for regions of the scene that other cameras also see.

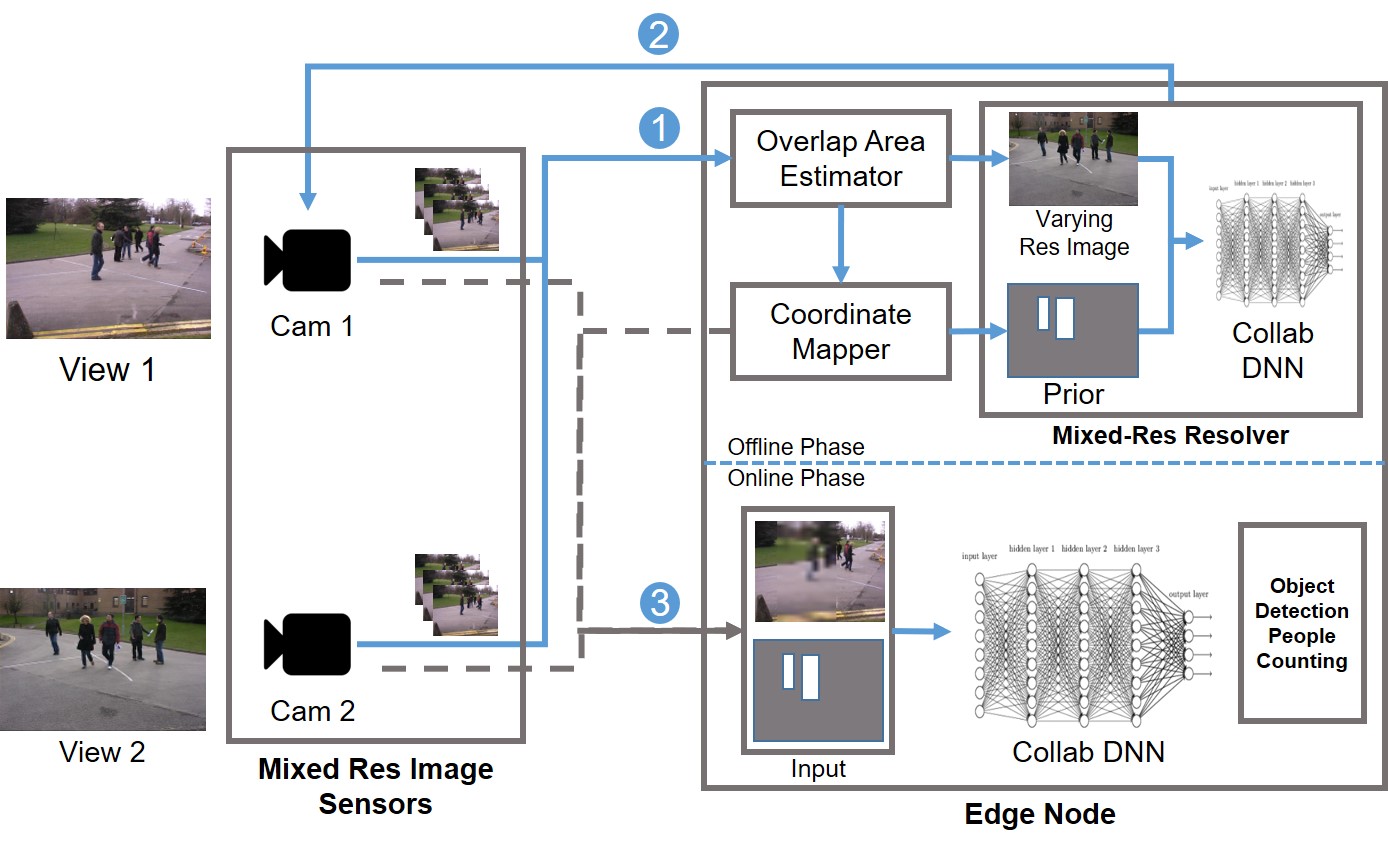

Approach & Contributions: CollabCam introduces:

- Mixed-Resolution Frame (MRF): Each camera transmits low-resolution image data for the regions that overlap with its peers' fields of view and full-resolution data only for the rest, drastically reducing bandwidth.

- Collaborative Inference: Cameras share object bounding boxes with their peers, translated into each receiving camera's view, as auxiliary input. This lets off-the-shelf object detectors (e.g., YOLOv3, SSD) be retrained for collaboration without structural changes.
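The two mechanisms above can be illustrated with a small, dependency-free Python sketch. It is not CollabCam's implementation and rests on several assumptions of ours: frames are grayscale lists of rows, the overlap with a peer is a single known rectangle whose sides are multiples of the downsampling `factor`, "low resolution" means block averaging, and "translating" a box means projecting its corners through a pre-calibrated 3×3 homography `H` between the two views (a common choice for roughly planar scenes; the summary does not say how CollabCam maps boxes). All function names (`build_mrf`, `payload_pixels`, `translate_box`) are hypothetical.

```python
import math
from typing import List, Optional, Sequence, Tuple

# Illustrative sketch only: names and encodings are hypothetical, not CollabCam's code.
Frame = List[List[float]]         # grayscale image indexed as frame[y][x]
Rect = Tuple[int, int, int, int]  # (x0, y0, x1, y1); x1 and y1 are exclusive


def downsample(region: Frame, factor: int) -> Frame:
    """Block-average `region` by `factor` on each axis (sides must be multiples of factor)."""
    return [
        [
            sum(region[y + dy][x + dx] for dy in range(factor) for dx in range(factor))
            / factor ** 2
            for x in range(0, len(region[0]), factor)
        ]
        for y in range(0, len(region), factor)
    ]


def upsample(region: Frame, factor: int) -> Frame:
    """Nearest-neighbour upsampling: repeat every pixel `factor` times on each axis."""
    return [[v for v in row for _ in range(factor)] for row in region for _ in range(factor)]


def build_mrf(frame: Frame, overlap: Rect, factor: int) -> Tuple[Frame, Frame]:
    """Return (low_res_patch, mixed_frame) for a single overlapping rectangle.

    low_res_patch is what would be sent for the overlap; mixed_frame is a
    receiver-side view: full resolution outside `overlap`, upsampled inside it.
    """
    x0, y0, x1, y1 = overlap
    if (x1 - x0) % factor or (y1 - y0) % factor:
        raise ValueError("overlap width and height must be multiples of factor")
    crop = [row[x0:x1] for row in frame[y0:y1]]
    low = downsample(crop, factor)
    mixed = [list(row) for row in frame]  # copy so the input frame is untouched
    for dy, row in enumerate(upsample(low, factor)):
        mixed[y0 + dy][x0:x1] = row
    return low, mixed


def payload_pixels(width: int, height: int, overlap: Rect, factor: int) -> int:
    """Pixels transmitted per MRF: full-res area outside overlap + downsampled overlap."""
    x0, y0, x1, y1 = overlap
    ow, oh = x1 - x0, y1 - y0
    return width * height - ow * oh + (ow // factor) * (oh // factor)


def translate_box(box: Rect, H: Sequence[Sequence[float]],
                  width: int, height: int) -> Optional[Rect]:
    """Map a peer's box into this view; None if it lands outside the frame.

    Assumption: the two views are related by a pre-calibrated homography H.
    """
    x0, y0, x1, y1 = box
    xs, ys = [], []
    for x, y in ((x0, y0), (x1, y0), (x0, y1), (x1, y1)):
        w = H[2][0] * x + H[2][1] * y + H[2][2]  # projective scale term
        xs.append((H[0][0] * x + H[0][1] * y + H[0][2]) / w)
        ys.append((H[1][0] * x + H[1][1] * y + H[1][2]) / w)
    # Axis-aligned box enclosing the projected corners, clipped to the frame.
    nx0, ny0 = max(0, math.floor(min(xs))), max(0, math.floor(min(ys)))
    nx1, ny1 = min(width, math.ceil(max(xs))), min(height, math.ceil(max(ys)))
    return (nx0, ny0, nx1, ny1) if nx1 > nx0 and ny1 > ny0 else None


if __name__ == "__main__":
    frame = [[float(x + 8 * y) for x in range(8)] for y in range(4)]  # toy 8x4 frame
    overlap = (4, 0, 8, 4)  # hypothetical: right half is also seen by a peer
    low, mixed = build_mrf(frame, overlap, 2)
    print(payload_pixels(8, 4, overlap, 2))  # 20 (16 full-res + 4 low-res)
    shift = [[1, 0, -100], [0, 1, 0], [0, 0, 1]]  # toy homography: pure x-shift
    print(translate_box((120, 10, 160, 50), shift, 640, 480))  # (20, 10, 60, 50)
```

Returning `None` for a box that projects entirely outside the frame lets a receiver drop peer detections it cannot see; how the surviving boxes are encoded as auxiliary input to the retrained detector is left open here, since the summary does not specify it.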

System Architecture:

Experimental Results: On benchmark outdoor campus datasets, CollabCam achieves a 50–60× reduction in frame size with at most a 2–5% drop in object detection accuracy, compared with degradations of more than 45–60% for non-collaborative baselines. On a Raspberry Pi prototype, it reduced the per-frame energy overhead by 25–35%, and by up to 45% with hardware optimization.

Impact: CollabCam demonstrates that efficient, energy-aware, edge-based collaboration between cameras can enable scalable vision analytics in resource-constrained, real-world deployments without major accuracy loss.